We used to deploy our production Kubernetes clusters using Kubespray and configured them using fluxcd. At the time, this proved to be a good way to manage a few clusters, but as we grew, we had to manage more and more clusters, and Kubespray just wasn’t good enough for this scale.

Fluxcd v1 was used to achieve GitOps functionality for Kubernetes deployments, which worked fine, but ultimately lacked some features we needed, especially when it came to automation and visibility. ArgoCD proved a great fit there and, coupled with some custom controllers, Cluster API and vSphere, had us covered from head to toe.

While Flux allowed us to sync manifests to each cluster, it had to be deployed in every cluster where we wanted to use it. This proved less than ideal for us because if something didn’t work, we had to connect to each cluster and read the `fluxcd` logs to see why.

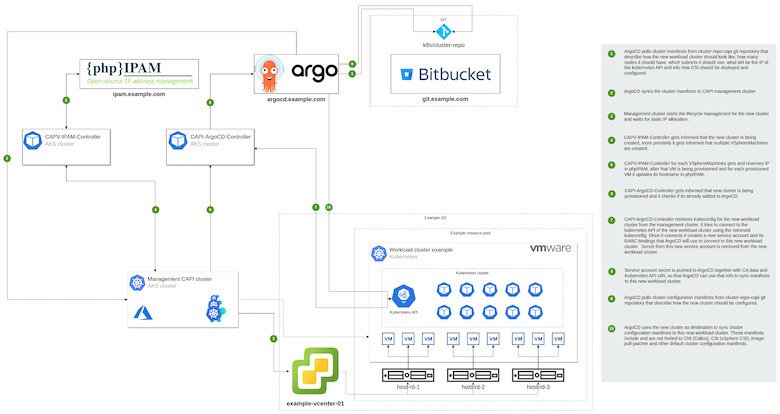

ArgoCD works a bit differently, as it pushes the configuration to the target cluster. This enables us to deploy one ArgoCD per region, and one instance can manage multiple clusters in multiple DCs and domains.

When we investigated ArgoCD, it immediately seemed like a good choice for both Kubernetes deployments and deploying workload clusters. We liked the observability it gave us, and its API was very easy to use.

How it works

With ArgoCD, you deploy your application through your own ArgoCD Application, a resource that records where the manifests for your service are stored and which cluster those manifests should be synced to.

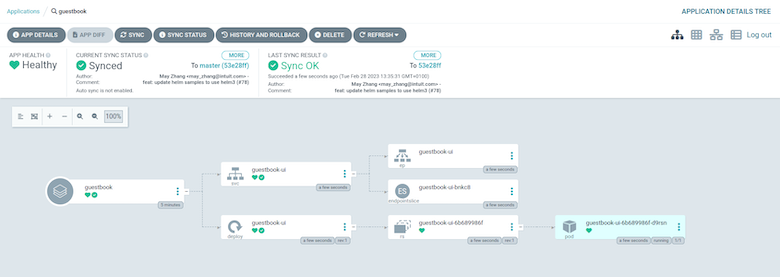

After you add the application to ArgoCD, you get a visual representation of what your application looks like when deployed to Kubernetes. You can see everything that you’ve defined in your manifests. At that point, all resources are shown as “missing” from the target cluster until you press Sync to push them to the cluster you specified in your ArgoCD Application.

After syncing, you will have a live visual representation of how your application is scheduled to the Kubernetes cluster and what resources it is using. If you have multiple Kubernetes services, you will also get a diagram showing how traffic is routed for your service.

ArgoCD Application Example

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
  namespace: argocd
spec:
  destination:
    namespace: default
    server: https://kubernetes.default.svc
  project: default
  source:
    directory:
      recurse: true
    path: guestbook
    repoURL: https://github.com/argoproj/argocd-example-apps
    targetRevision: master
```

Kubernetes has default self-healing mechanisms, and ArgoCD Applications have their own self-healing mechanisms on top of that. This helps keep your service up and running. ArgoCD can sync your Application automatically if the live state in the target cluster deviates from the desired state.
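As a minimal sketch of how automatic syncing is switched on, the fragment below adds a `syncPolicy` to the `spec` of an Application such as the guestbook example. The `prune` and `selfHeal` values are illustrative choices, not necessarily the settings we run in production.

```yaml
# Fragment of an ArgoCD Application spec (assumed settings, adjust to taste).
spec:
  syncPolicy:
    automated:
      # Delete resources from the cluster once they are removed from Git.
      prune: true
      # Re-sync when the live state drifts from the desired state in Git.
      selfHeal: true
```

Without an `automated` block, the Application stays on manual sync and waits for someone to press Sync.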

Automate the whole thing

When we realized we needed a faster way to deploy Kubernetes clusters, we chose Cluster API, a project of the Kubernetes SIG Cluster Lifecycle.

It gave us a declarative way to deploy a production-ready Kubernetes cluster. With just a few manifests, we could run `kubectl apply` against an already set-up Cluster API management cluster and get a fully deployed workload Kubernetes cluster. But we didn’t want to do this manually.
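To give a feel for what such a manifest looks like, here is a minimal sketch of the top-level Cluster API `Cluster` object for a vSphere-backed cluster. The names, the pod CIDR, and the `v1beta1` API versions are assumptions for illustration; the versions in particular depend on the Cluster API release installed in the management cluster.

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: workload-01                # hypothetical cluster name
  namespace: default
spec:
  clusterNetwork:
    pods:
      cidrBlocks:
        - 192.168.0.0/16           # assumed pod CIDR
  # Reference to the object that describes the control plane nodes.
  controlPlaneRef:
    apiVersion: controlplane.cluster.x-k8s.io/v1beta1
    kind: KubeadmControlPlane
    name: workload-01-control-plane  # hypothetical
  # Reference to the vSphere-specific cluster object.
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: VSphereCluster
    name: workload-01                # hypothetical
```

A complete cluster definition also needs the referenced objects themselves, plus machine templates and a machine deployment for the worker nodes.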

So we introduced ArgoCD. It enabled us to deploy manifests to the management cluster and observe what happened. This was when we realized what else it could do: it can deploy anything that the Kubernetes API can read, in the specific way we want it to be deployed. It can provide us with most of the features we require, including secret management, logging, and workload state overview.

ArgoCD usage for cluster deployment (automate, automate, automate)

Now, how do we use ArgoCD to deploy production-ready Kubernetes clusters?

As mentioned above, with Cluster API, you just push “some” manifests to the management cluster, and a working production-ready cluster is created. But that’s easier said than done.

To create the VMs (virtual machines) in vSphere that will join the clusters, you need to have DHCP (Dynamic Host Configuration Protocol) enabled on your network (we don’t). This was the first issue we needed to resolve, and we did it by writing a custom Kubernetes controller that updates VSphereMachines with valid static IPs.
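For illustration, the sketch below shows roughly what a `VSphereMachine` could look like once static addressing has been filled in. It is an assumption about the shape of the result, not the output of our controller: the names, template, network, and addresses are all hypothetical, and the API version depends on the installed vSphere provider.

```yaml
apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: VSphereMachine
metadata:
  name: workload-01-md-0-abc12     # hypothetical machine name
spec:
  template: ubuntu-2004-kube       # hypothetical VM template
  network:
    devices:
      - networkName: vm-network    # hypothetical vSphere network
        # DHCP is not available, so the address is set statically.
        dhcp4: false
        ipAddrs:
          - 10.0.0.21/24           # hypothetical static IP
        gateway4: 10.0.0.1         # hypothetical gateway
        nameservers:
          - 10.0.0.2               # hypothetical DNS server
```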

After the VMs are created in vCenter, they are joined into a cluster, but this is still not a ready cluster, as it is missing some of the core components, like the overlay network. This causes the cluster to be in a not-ready state, and all of the nodes in the cluster are tainted.

We automate the next step with the ArgoCD API, which our custom Kubernetes controller calls to create a second ArgoCD Application, cluster-config, for the new cluster. This Application contains the overlay network configuration and everything else that we specify.

To sync this second ArgoCD Application, we first need to add the workload cluster we have just deployed to the ArgoCD instance. That way, this ArgoCD instance can connect to it and manage it. To do this, we wrote another Kubernetes controller that supports this automation.

The workload cluster is only added to the ArgoCD instance once its kube-apiserver is up and running. The cluster-config Application is synced automatically when we detect that the workload cluster has been added to the ArgoCD instance.
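One way to register a cluster with ArgoCD declaratively is a labeled Secret in the ArgoCD namespace. The sketch below shows that format. Whether a controller creates such a Secret or calls the ArgoCD API instead is an implementation choice, and the names, server URL, and credential placeholders here are hypothetical.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: workload-01-cluster        # hypothetical secret name
  namespace: argocd
  labels:
    # This label tells ArgoCD to treat the Secret as a cluster definition.
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: workload-01                # hypothetical cluster name shown in ArgoCD
  server: https://workload-01.example.internal:6443   # hypothetical API server URL
  config: |
    {
      "bearerToken": "<service-account-token>",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "<base64-encoded-CA-certificate>"
      }
    }
```

Once the cluster is registered, an Application can target it through `spec.destination.server` or `spec.destination.name`.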

Overlay network and ArgoCD Sync Waves

We decided on Calico with BGP peering for the overlay network (CNI). To deploy it, we had to use some advanced features of ArgoCD sync, because the resources need to be deployed in a specific order: some of them expect that other resources have already been deployed.

Luckily, this was fairly easy to set up using ArgoCD Sync Waves, which let you specify the exact order in which resources are applied, as our BGP setup requires. We also added a retry for this sync, which ArgoCD supports natively, because a time-out can otherwise leave the sync in a failed state.
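As a rough sketch of the mechanism, a sync wave is set with an annotation on each resource: lower waves are applied first, and resources without the annotation default to wave 0. The Calico resources, their values, and the wave numbers below are illustrative assumptions, not our actual configuration. The example also assumes the `projectcalico.org/v3` API is available in the target cluster.

```yaml
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
  annotations:
    argocd.argoproj.io/sync-wave: "1"   # applied first
spec:
  nodeToNodeMeshEnabled: false
  asNumber: 64512                       # hypothetical private AS number
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: rack-router                     # hypothetical peer name
  annotations:
    argocd.argoproj.io/sync-wave: "2"   # applied after wave 1 has synced
spec:
  peerIP: 192.0.2.1                     # hypothetical peer address
  asNumber: 64512
```

Retries are configured on the Application itself. The limit and backoff values in this fragment are assumed for illustration:

```yaml
# Fragment of an ArgoCD Application spec.
spec:
  syncPolicy:
    retry:
      limit: 5              # give up after five failed attempts
      backoff:
        duration: 10s       # wait before the first retry
        factor: 2           # double the wait after each failure
        maxDuration: 3m     # cap on the wait between retries
```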

When the overlay network is finally deployed to the workload cluster using a cluster-config Application, we can consider our cluster up and running!

In the end, ArgoCD gave us excellent visibility: a single place to find everything deployed anywhere in Kubernetes. We don’t have to wonder whether a workload is really deployed to some cluster. This was very useful for the deployments of our own Kubernetes controllers, which support the workload cluster deployment automation.

These workloads are system-critical, and in ArgoCD, we have one place to monitor if everything is okay, check logs, and perform the rollout of new versions. The same principle applies to any Kubernetes workload.