Call a conversational AI assistant from ElevenLabs

This tutorial describes how to call a conversational AI assistant from ElevenLabs using the low-code/no-code product Call Link. Call Link connects end users with a predefined destination without the need to develop an end-user application.

This tutorial uses a GitHub repository to guide you through the process of setting up the Call Link. The goal of this tutorial is to:

- Improve intuitive voice interaction with conversational AI

- Use voice interactions with the WebSocket protocol

- Use the Calls API platform for WebSocket integration

Use case

Intuitive voice interaction with conversational AI

Consumers are becoming increasingly accustomed to interacting with voice assistants on their smartphones or home devices, such as Amazon Alexa, Google Home, or Apple Siri. With the emergence of advanced conversational AI models with voice chat capabilities, including OpenAI’s ChatGPT and Google Gemini, users expect a similar level of fluid and dynamic voice interaction when contacting businesses and service providers. The old, menu-driven Interactive Voice Response (IVR) systems are gradually becoming obsolete, making way for more natural and intuitive voice interactions.

WebSocket in the context of voice AI communications

WebSocket is one of the most common communication protocols for enabling real-time voice interaction with AI assistants. Unlike traditional HTTP-based communication, which works in a request-response model, WebSocket allows full-duplex communications between a client and a server. This means that both parties can send and receive data simultaneously, making it an ideal protocol for streaming voice interactions.
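To make the full-duplex idea concrete, the following is a minimal in-memory sketch, not a real WebSocket connection. It uses two `asyncio` queues as stand-ins for the two directions of a socket, and all function names (`uplink`, `ai_service`, `downlink`, `run_duplex`) are hypothetical. The point it illustrates is that sending and receiving run as independent concurrent tasks, so the sender never waits for a reply before pushing the next frame.

```python
from __future__ import annotations

import asyncio


async def uplink(to_server: asyncio.Queue, frames: list[bytes]) -> None:
    """Caller side: push audio frames without waiting for any reply."""
    for frame in frames:
        await to_server.put(frame)
    await to_server.put(None)  # sentinel marking the end of the stream


async def ai_service(to_server: asyncio.Queue, to_client: asyncio.Queue) -> None:
    """Stand-in for the AI service: answers each frame as soon as it arrives."""
    while (frame := await to_server.get()) is not None:
        await to_client.put(b"reply-to-" + frame)
    await to_client.put(None)


async def downlink(to_client: asyncio.Queue) -> list[bytes]:
    """Caller side: collect synthesized audio while the uplink keeps sending."""
    received: list[bytes] = []
    while (frame := await to_client.get()) is not None:
        received.append(frame)
    return received


async def run_duplex(frames: list[bytes]) -> list[bytes]:
    """Run both directions concurrently over a pair of in-memory queues."""
    to_server: asyncio.Queue = asyncio.Queue()
    to_client: asyncio.Queue = asyncio.Queue()
    _, _, received = await asyncio.gather(
        uplink(to_server, frames),
        ai_service(to_server, to_client),
        downlink(to_client),
    )
    return received


if __name__ == "__main__":
    print(asyncio.run(run_duplex([b"frame-1", b"frame-2"])))
```

In a request-response model, the caller would have to finish one exchange before starting the next. Here the three coroutines are scheduled together, which mirrors how a voice stream keeps flowing in both directions at once.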

In voice AI integrations, WebSocket transmits audio data in real time. This lets an AI service, like ElevenLabs, process incoming speech, generate responses, and send synthesized voice back to the caller without noticeable delay. This low-latency communication is critical for delivering a seamless and engaging user experience.

Products and channels

Prerequisites

- Infobip account to use the communication channels. If you do not have an account, sign up for one.

- CPaaS Voice and WebRTC channel on your Infobip account (you can request this channel)

- ElevenLabs developer account

- ngrok account

- Knowledge of implementing APIs

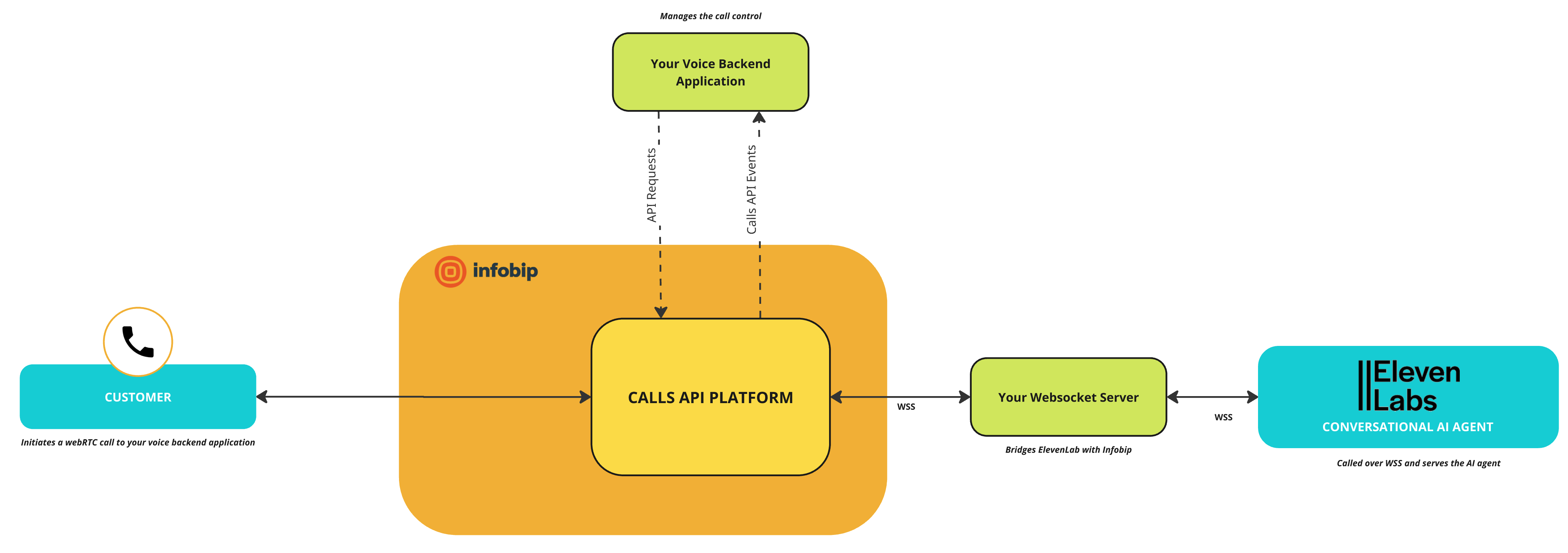

Technical specifications diagram

Process overview

The process relies on the WebSocket Endpoint feature of the Calls API platform. This feature enables connection to a conversational AI service like ElevenLabs over a phone call, WebRTC, SIP, or even voice-over-the-top (OTT) channels like Viber and WhatsApp.

The WebSocket Endpoint allows developers to establish a direct audio stream between the Voice API platform and an external AI service. This means that you can route voice interactions from callers directly to an AI assistant running on ElevenLabs, effectively transforming any phone call into an intelligent conversation. With this integration, you can offer a more interactive and human-like voice experience to your customers, improving engagement and supporting automation.

This tutorial describes how to use the Infobip Call Link (a no-code/low-code WebRTC solution) to connect with an ElevenLabs conversational agent.

To integrate Infobip with an ElevenLabs conversational agent, you need to develop and host two key application servers:

- Voice Backend (Call Control and AI Assistant Integration)

  - Manages call control, handling inbound WebRTC calls to your Infobip account and connecting them to your AI assistant via WebSocket

  - Bridges the two call legs into a seamless conversation over a dialog

  - Uses Infobip’s Calls API methods to perform call-related actions and listens for Calls API events to track the status of these operations

- Proxy Server (Audio Stream Conversion & Buffering)

  - Acts as a bridge between Infobip and ElevenLabs, converting audio streams between the two platforms

  - Required because Infobip streams raw audio over WebSockets, whereas ElevenLabs requires base64-encoded audio streams; the proxy handles this conversion in real time

Implementation using GitHub repository

This tutorial is accompanied by a GitHub repository that contains step-by-step instructions for calling a conversational AI assistant from ElevenLabs. It also includes the voice backend application code and the WebSocket proxy application code, which you can deploy on your personal computer.

Follow the guidance and instructions, and your application will be up and running quickly. You will be able to talk to your conversational AI assistant over a WebRTC connection. Once you understand how this works, it is straightforward to replace the inbound WebRTC call with an inbound phone call or SIP call.

To access the GitHub repository, see Infobip ElevenLabs Conversational Integration tutorial.